1 Introduction to Computing and Computer Science

Computers have been around for thousands of years. They just did not have all of the cool flashing lights

nearly every facet of our lives. But as engineers our vocational interest in computer science tends to be narrower. While we may be interested in designing a more powerful computer, or a more efficient computer, or a more practical computer, it is more likely that we are interested in the computer as a tool: a means of completing a computational task in the most efficient way possible. In this case our interest is not in the computer, but in computing.

Any elementary school student can provide you with a definition of computing: it is adding and subtracting. The high school student would tell you that it is using a computer. And the engineer is likely to return to the arithmetic definition. While these are all true, they are too narrow. We are going to use a more general definition of computing. Computing is the process of turning data into information.

With a definition of computing we can now define a computer. A computer is a tool for computing. This is a circular definition, but it describes the process of using a tool to turn data into information.

This definition is important in part for what it omits. It does not specify what form the tool must take. The computer does not have to be digital or even mechanical. It can be static or dynamic. As long as we can use the tool to turn data into information, it is a computer. With this definition of computing we can see that computation has been around for thousands of years.

It should be obvious that the first computers were ourselves. We counted on our fingers and toes. According to archaeologists the idea of counting goes back over fifty thousand years.

If the counts exceeded ten or twenty the ancients learned to make marks or cut notches in sticks.

The term computer actually comes from this human origin. Before mechanical computers, when large-scale computation was needed, people were hired to do the calculations by hand.

A well-known early example dates from the nineteenth century, when the Harvard Observatory needed people to perform tedious arithmetic calculations. Those hired were mainly women who were degreed mathematicians. The practice continued into the twentieth century and became famous through the depiction of NASA's human computers in the 2016 film Hidden Figures. The job title of these women was computer, and the term stuck.

And after we counted, if we needed to store the resulting information, we put pebbles in a pot or made knots in a string (figure ??). Later, we created ledgers and made notations in those ledgers. These were simple mechanical means of performing calculations and storing the results.

As humanity progressed so did our demands for accuracy and precision. These demands drove innovation in computing. An early innovation came about as a result of mankind’s desire for accurate and repeatable measurements.

Early construction was accomplished using measurements based upon the craftsman’s body parts: the width of a hand, or the length of the foot. This led to the cubit – the length of the forearm from the extended middle finger to the elbow. While considered a standardized measurement of approximately 500 mm, it varied by as much as ten percent between different cultures.

Cubit

The cubit was an ancient unit of length based roughly on the length of the forearm from the extended middle finger to the elbow. While considered a standard, its length varied between cultures.

These measurement tools lacked precision. One carpenter’s foot was significantly different from another’s. Even a skilled craftsman would be inconsistent from one measurement to the next. As a result one of the oldest, simplest, and possibly most useful computers was invented – the ruler. A ruler provided a means of making precise, repeatable measurements of linear distances. Rulers also resolved problems with accuracy in measurements. As long ago as five thousand years, rulers were in use that were accurately calibrated to a sixteenth of an inch.

Accuracy or Precision

While often taken as synonyms, accuracy and precision are different. Accuracy is an indication of how close a measurement – or the average of many measurements – is to the actual value. Precision is a measure of how close multiple measurements are to each other.
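The distinction can be made concrete with a few lines of Python. The sketch below is only an illustration: the function names, the "true" length, and both sets of readings are invented for the example, and the population standard deviation is just one reasonable way to express how close readings are to each other.

```python
from statistics import mean, pstdev

def accuracy_error(measurements, true_value):
    """How far the average reading is from the actual value (smaller = more accurate)."""
    return abs(mean(measurements) - true_value)

def precision_spread(measurements):
    """How far the readings scatter around their own average (smaller = more precise)."""
    return pstdev(measurements)

true_length = 100.0  # hypothetical actual length, in millimetres

# Tightly grouped but offset from the true value: precise, not accurate.
precise_only = [102.0, 102.1, 101.9, 102.0]

# Widely scattered but centred on the true value: accurate, not precise.
accurate_only = [97.0, 103.0, 99.0, 101.0]

for name, readings in [("precise_only", precise_only), ("accurate_only", accurate_only)]:
    print(name, accuracy_error(readings, true_length), precision_spread(readings))
```

The first set of readings has the smaller spread, while the second has the smaller error in its average, so a measuring tool can be good at one and poor at the other.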

Of course the computer that we call the ruler – even a homemade ruler – was fine for precise linear measurements. But the ancients were fascinated with angles as well. An ability to measure an angle would open up their study of the movement of the earth, the planets, and the stars. Thus the next computer was created – the protractor. The protractor made it possible to measure distances along an arc. The ancients could then measure the movements of planets and stars.

Sundials were not only timepieces. They were also calendars, in that they could be used to measure the passage of days and seasons by measuring the length of the shadow cast by the gnomon. The shadow changed throughout the year. By using a ruler and a protractor the ancients turned a clock into a means of computing the time of year and also the position of the sun, and thus the angle that the earth made with the sun.

Antikythera Mechanism

Early astronomers made use of protractors as computers that could measure the positions of the stars and the movement of the planets. And while their sextants may not have been mechanical, the data that they collected was used in what is considered to be the first mechanical computer – the Antikythera Mechanism.

The static computers developed in antiquity were meant as measuring devices, but they were in their own way computers. They turned data into information. But the industrial revolution brought about increasing demands for data collection and analysis.

Antikythera Mechanism

The Antikythera Mechanism is a clockwork mechanism that, through the use of gears, shows the position of the moon, sun, and planets. It was recovered in 1901 from a wreck, discovered the previous year, of a Roman galley thought to have sunk about 70 BCE. It has been proposed that it was invented by the Greek engineer Archimedes, and it is now widely considered to be history’s first mechanical computer.

These first computers provided their users with a means of measuring distances, locations, and time. They were also storage devices in that they were developed to model the known universe. But they did not compute – at least not in the sense of performing various mathematical calculations of which we think when we discuss computing.

Babbage’s Analytical Engine

While the techniques and devices discussed so far are all computers, we are interested in computers that provide computational assistance; that is, computers that can perform calculations for us. This type of computing machine is widely attributed to Charles Babbage, a Cambridge mathematician of the early nineteenth century (figure: Charles Babbage (1791 – 1871)).

Babbage, in 1812, developed the concept of what he called the difference engine: a mechanical machine that he hoped could be used to tabulate the values of polynomial functions. He developed a working prototype of his difference engine in 1822, but dropped the project to pursue what was to become the first modern mechanical computer – his analytical engine.
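The difference engine takes its name from the method of finite differences, which tabulates a polynomial using nothing but repeated addition. The Python sketch below illustrates that method only; it is not a model of Babbage's gearing, and the function names and the sample polynomial are chosen purely for the example.

```python
def initial_differences(f, degree, start=0):
    """Build the starting column [f(start), first difference, second difference, ...]."""
    row = [f(start + i) for i in range(degree + 1)]
    column = []
    while row:
        column.append(row[0])
        # Each pass replaces the row with the differences of neighbouring entries.
        row = [b - a for a, b in zip(row, row[1:])]
    return column

def tabulate(column, count):
    """Produce `count` successive polynomial values using additions only."""
    column = list(column)
    values = []
    for _ in range(count):
        values.append(column[0])
        # Add each difference into the entry above it, working from the top down.
        for i in range(len(column) - 1):
            column[i] += column[i + 1]
    return values

# Hypothetical example polynomial: x^2 + x + 41
start_column = initial_differences(lambda x: x * x + x + 41, degree=2)
print(tabulate(start_column, 6))  # [41, 43, 47, 53, 61, 71]
```

Once the starting column is set, every further value comes from addition alone, which is what made the method attractive for a machine built from gears.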

Another mathematician, Ada Lovelace, worked closely with Babbage on the analytical engine. While not given the credit that Babbage received, Lovelace was instrumental in working on the algorithmic processes for the device. As such she is often credited as being the first computer programmer.

For the next hundred years the precursor to the modern computer was mechanization. Typewriters and adding machines were developed to support the industrial revolution. Henry Ford used automation to mass produce automobiles. The mechanical devices created to support the industrial revolution are not commonly thought of as computers, but in their own way they are.

World conflict brought further advances in computing. World Wars I and II brought with them the rise of military aviation, and mechanical devices were developed that optimized how airplanes could be used in combat.

Synchronization Gear

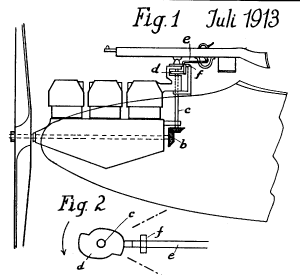

Early in 1914 it became clear that a pilot risked catastrophe when firing a weapon from a moving airplane. If the weapon was handheld, the bullets could strike the pilot’s own airplane. If it was mounted on the airplane, it had to be aimed by pointing the aircraft in the direction of the target. When mounted directly in front of the pilot, the bullets could strike the propellor.

Engineers designed a mechanical computer called a synchronization gear – more commonly called the interruptor – that could determine when the propellor was within the weapon’s line of fire. At that point in the rotation it would disable the firing mechanism (figure: French patent drawing for a synchronization gear).

While still mechanical, the interruptor meets our definition of a computer. It collected data – the arc position of the propellor – and processed it into actionable information – a decision as to whether or not the weapon would be able to fire.
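The data-to-decision step can be written as a very small program. The sketch below is a simplified illustration rather than a description of any real synchronization gear: the gun's line of fire is assumed to sit at 0 degrees, and the blade count, the blade half-width, and both function names are hypothetical.

```python
def blade_in_line_of_fire(propeller_angle_deg, blade_count=2, blade_half_width_deg=10.0):
    """Return True when any blade overlaps the gun's line of fire (assumed at 0 degrees).

    propeller_angle_deg is the angle of one reference blade measured from the gun line.
    """
    spacing = 360.0 / blade_count            # angle between neighbouring blades
    offset = propeller_angle_deg % spacing   # how far the nearest blade has passed the gun line
    return offset <= blade_half_width_deg or offset >= spacing - blade_half_width_deg

def may_fire(propeller_angle_deg, **blade_settings):
    """The information produced from the data: is it safe to fire right now?"""
    return not blade_in_line_of_fire(propeller_angle_deg, **blade_settings)

print(may_fire(90.0))   # blades well clear of the gun line
print(may_fire(185.0))  # second blade is crossing the gun line
```

The input is a single number, the propellor angle, and the output is a single yes-or-no decision, which is the same data-to-information step the mechanical device performed with cams and linkages.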

The innovation in mechanical computers brought about by the First World War pales when compared to that of the Second World War.

Enigma Machine

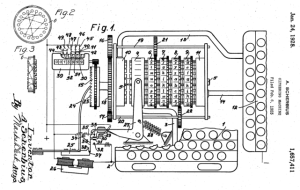

During the 1920s and 30s work had started on the development of advanced means for encrypting text. Generally known as Enigma, these computing devices used a series of rotors and plug connectors to encrypt text. They functioned on the idea that if the number of possible settings is large, then the chance of an enemy being able to crack the cipher is very low. While the Enigma machine is commonly thought of as a German device, a United States patent was issued for an early Enigma machine (figure: US patent drawing for the Enigma encryption device).


Differential Analyzer

Mechanical computers were also developed to provide specific analyses for the war effort. In the First World War, the brand new airplane would fly at fifty knots with a maximum ceiling of several thousand feet. As the Second World War began airplanes were flying at three hundred knots and at altitudes above twenty thousand feet. A flyer could no longer simply point the plane at the target.

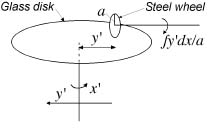

To resolve the issue engineers designed a mechanical computer called the differential analyzer. Its purpose was to solve differential equations. When first designed and implemented it was used to calculate tide tables. With the advent of the Second World War it was adapted to calculate artillery trajectories.


The design of the differential analyzer was not new. It was first described in 1876. This early version was meant to perform integration, but as the design was developed it became a device to solve differential equations. It functioned by using a wheel and disk mechanism. The disk would undergo both a rotation and a translation. As it rotated, a wheel whose edge rested against the disk would turn. It was the rotation of this wheel that represented the output of the integrator.

The output would be a table. These tables were created by a pen drawing a curve on paper. These curves depicted the functional solution of the differential equation.
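In a wheel-and-disk integrator the wheel turns in proportion to its distance from the centre of the disk multiplied by the disk's rotation, so it accumulates a running sum of small products. A digital stand-in for that accumulation is the forward Euler method, sketched below in Python. It is a numerical analogy under that assumption, not a model of the actual mechanism, and the function name, step count, and example equation are illustrative only.

```python
def solve_by_integration(dydx, x0, y0, x_end, steps=10_000):
    """Tabulate y(x) for the equation dy/dx = dydx(x, y) by accumulating small increments.

    Each step adds slope * dx to the running total, much as the integrator wheel
    accumulates rotation. This is the forward Euler method.
    """
    dx = (x_end - x0) / steps
    x, y = x0, y0
    table = [(x, y)]            # the "curve" the pen would trace, as (x, y) points
    for _ in range(steps):
        y += dydx(x, y) * dx    # accumulate one small increment
        x += dx
        table.append((x, y))
    return table

# Hypothetical example: dy/dx = y with y(0) = 1, whose exact solution is e**x.
curve = solve_by_integration(lambda x, y: y, x0=0.0, y0=1.0, x_end=1.0)
final_x, final_y = curve[-1]
print(final_x, final_y)  # final_y approaches e as the step count grows
```

The list of points plays the role of the pen trace: plotted, it is the curve that represents the solution of the differential equation.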

As the Second World War came to an end, advances in electronics, beginning with Fleming’s valve, the first vacuum tube, made way for the development of electronic computers. The vacuum tube provided a means of controlling the flow of current through an electronic circuit. With it, electronic devices proliferated. Sound and visual transmission through radio, telephone, and later television became common.

More relevant to our interests, the vacuum tube and the advances in electronics that followed it brought with them electronic computing.

ENIAC

The first of these was the Electronic Numerical Integrator and Computer, or ENIAC, designed and built at the University of Pennsylvania. It was completed and began operation in December 1945 and was formally dedicated on February 15, 1946. With roughly 18,000 vacuum tubes it could calculate about a thousand times faster than the previous electro-mechanical computers. While it was the fastest computer of its time, its vacuum tubes were unreliable, with several burning out every day. As a result it was only functional about fifty percent of the time.

The ENIAC was developed under a war footing. Much like the differential analyzer, its intended purpose was to calculate artillery firing tables for the US Army Ballistic Research Laboratory. It was used primarily for this purpose for ten years until its decommissioning in 1955.

It is often interesting to compare the characteristics of a historical device with those of its modern counterparts. The ENIAC was quite imposing. It weighed about 25,000 kilograms (27 tons) and had a footprint of 167 square meters (1800 square feet).

With regard to computing power, it could store twenty 10-digit decimal numbers and perform 5,000 addition or subtraction operations per second. Much slower at multiplication, it could perform 357 full-precision multiplication operations per second. It could, of course, multiply faster if less precision was required for the factors. Full-precision quotients could be calculated at a rate of about 35 per second.
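These rates make it easy to estimate how long a given job would have kept the machine busy. The short Python sketch below uses only the three full-precision figures quoted above; the function name and the sample workload are hypothetical, and the estimate ignores setup time and downtime.

```python
# Operations per second, taken from the figures quoted in the text.
ENIAC_OPS_PER_SECOND = {"add": 5000, "multiply": 357, "divide": 35}

def eniac_seconds(workload):
    """Estimate the run time, in seconds, of a workload given as {operation: count}."""
    return sum(count / ENIAC_OPS_PER_SECOND[op] for op, count in workload.items())

# Hypothetical job: 10,000 additions and 70 divisions.
print(eniac_seconds({"add": 10_000, "divide": 70}))  # 4.0
```

Two seconds of additions plus two seconds of divisions gives four seconds, even though the divisions are less than one percent of the operations, which shows how strongly the slower operations dominate.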

Compare that to a modern smartphone, which weighs a fraction of a kilogram and can perform billions of operations per second.

Whether the computer is a two-hundred-year-old mechanical computer or a modern electronic computer, it will have a common trait. There will be the physical device – the gears or circuits – and the set of instructions – the program – that will drive the computer. The physical components are the hardware while the program is the software.

The very first mechanical computers – think back to the Antikythera mechanism – were limited by their design to solving a single problem. Later computers – such as the ENIAC and UNIVAC – could be made to perform many different types of computation. But they were still constrained by how the operations – the programs – were entered into the computer.

Those early computers, such as rulers and protractors, had their operations fixed into the computer. In these cases the programming was static; once created it could not be changed. You cannot change the marks on a ruler or a protractor, or add or remove planets from the Antikythera mechanism.

While these devices were programmed, the program – or the algorithm – was built into the machine. The operations were integrated into the design of the computer. The program and the hardware were one and the same.

As a result, these computers did not require a means of programming them once they were built.

Program

The ordered set of instructions that direct a computer on how to complete an algorithm.

But some of the early mechanical computers, such as Babbage’s analytical engine, and electronic computers like the ENIAC and UNIVAC were programmable, meaning the instructions they followed could be changed. A program could be revised, or replaced completely. This created the idea of a temporary set of specific instructions that would enable a computer to run a particular algorithm. This set of instructions was separate from the computer itself, which we now know as the hardware. Further, these instructions were more ethereal. They lacked the physical form of the computer. Since the instructions were not hard like a physical device, they became known as software.
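The difference between a fixed program and a replaceable one can be sketched in Python. Both "machines" below are toy illustrations invented for this example: the first has its single operation built in, while the second is handed its instructions as a separate list that can be revised or swapped out.

```python
def fixed_doubler(value):
    """A fixed-program machine: its one operation is built into the 'hardware'."""
    return value * 2

def programmable_machine(program, value):
    """A programmable machine: the instructions arrive separately and can be replaced.

    `program` is an ordered list of (operation name, operand) pairs.
    """
    operations = {
        "add": lambda a, b: a + b,
        "subtract": lambda a, b: a - b,
        "multiply": lambda a, b: a * b,
    }
    for name, operand in program:
        value = operations[name](value, operand)
    return value

print(fixed_doubler(21))                                          # 42
print(programmable_machine([("multiply", 2)], 21))                # 42
print(programmable_machine([("add", 3), ("multiply", 4)], 2))     # 20
```

The same `programmable_machine` produces different results simply because the list of instructions changed; nothing about the machine itself had to be rebuilt.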

Hardware

None of us can avoid interacting with technology; we all interact with a multitude of computing devices every day. On a personal level these might be phones, tablets, a laptop, or a desktop computer. Moving outward we use online payment systems and ATMs. The technology that runs these services could be a personal device, but it could also be a mainframe computer that we never see. The common trait is that these are all examples of hardware: physical devices that run programs.

Hardware

The physical components of which a computer is made

“What you kick when it doesn’t work.”

But hardware is more than just the computer. It also includes all of the physical components of the computer. The processors and the peripherals such as the monitor, the keyboard, the mouse, and the physical storage memory are all hardware. We can group these into five separate categories:

| Component | Purpose |
| --- | --- |
| Central Processing Unit | Performs the operations in the computer |
| Main Memory | Random access memory (RAM) |
| Secondary Storage Memory | Non-volatile memory |
| Input Devices | Devices for entering data into the computer |
| Output Devices | Devices for retrieving information from the computer |

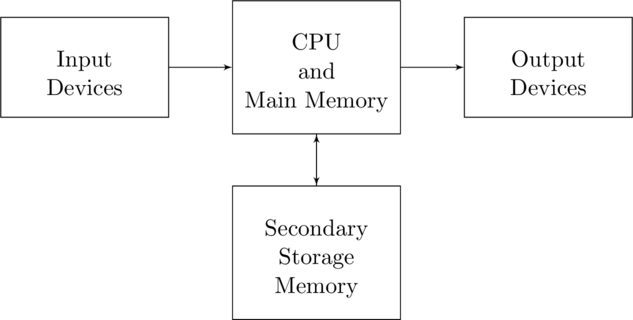

The interaction of the primary hardware components is shown in the figure. Each arrow indicates the possible direction of data travel – the data stream. Data streams can be either unidirectional or bidirectional. The input devices – such as a keyboard or a mouse – can only send data into the processor. Similarly the output devices – the monitor or a printer – can only receive data from the processor. But the stream to secondary storage, whether it is a hard drive on the computer or cloud storage over a network, is bidirectional. Data streams into the processor from the secondary storage as well as from the processor to the secondary storage.

Interaction between the hardware components of a computer
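One way to record these directions is as a set of one-way links, with a bidirectional stream stored as two links. The Python sketch below is a minimal illustration with hypothetical names. The input, output, and secondary storage links follow the description above; the two-way link to main memory is an assumption added for completeness, since the text does not state its direction.

```python
# Each pair is (source, destination): data may flow from the first to the second.
DATA_STREAMS = {
    ("input devices", "processor"),
    ("processor", "output devices"),
    ("processor", "secondary storage"),
    ("secondary storage", "processor"),
    # Assumption, not stated in the text: main memory is read and written by the processor.
    ("processor", "main memory"),
    ("main memory", "processor"),
}

def can_send(source, destination):
    """True if data can stream directly from source to destination."""
    return (source, destination) in DATA_STREAMS

def is_bidirectional(a, b):
    """True if data can stream in both directions between a and b."""
    return can_send(a, b) and can_send(b, a)

print(can_send("input devices", "processor"))               # True
print(can_send("processor", "input devices"))               # False
print(is_bidirectional("processor", "secondary storage"))   # True
```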

Software

Hardware is physical, but software is different. Hardware consists of the physical components of the computer, but software is intangible; it is the program. While you can read the instructions, you cannot actually hold them.

Software is the set of steps that we follow; what we will call an algorithm or a program. The program on a computer is the language-specific set of instructions the computer will follow in completing a task. Programs are commonly written in high-level languages that can either be compiled to create an executable file to run on the computer, or be interpreted one line at a time to complete the task.

But software can also be simple. Measuring the distance between two points involves following a set of instructions. How we do this – put the zero mark of the ruler on one point, lay its straight edge across the other, then read the value off the ruler – is the program. In this case we are the computer: our hands and eyes are the input devices, and our brain is the processor and also the secondary memory. The process is the software.
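Written out as code, the ruler procedure is only a few lines long. The Python sketch below is an illustration under simple assumptions: the two points lie along one straight line, positions are given in millimetres, and the ruler is read to its nearest graduation. The function and parameter names are invented for the example.

```python
def measure_with_ruler(point_a_mm, point_b_mm, graduation_mm=1.0):
    """Follow the ruler 'program': zero the ruler on one point, read the mark at the other.

    The reading is rounded to the nearest graduation, as a person reading a ruler would do.
    """
    offset = abs(point_b_mm - point_a_mm)          # the data: where the second point falls
    marks = round(offset / graduation_mm)          # nearest mark on the ruler
    return marks * graduation_mm                   # the information: the measured distance

print(measure_with_ruler(12.0, 47.3))                      # 35.0
print(measure_with_ruler(0.0, 10.6, graduation_mm=0.5))    # 10.5
```

A ruler with finer graduations returns a reading closer to the true separation, but the sequence of steps, the software, stays the same.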

Our goal is not to develop the hardware, but instead the software. The software can be a single line or command – or a complex set of millions of lines of instructions, but the process is the same in that it will collect data and transform it into information.

Almost anyone you ask the question “what is a computer?” will provide a similar answer; a desktop machine, a laptop, a tablet, or perhaps a phone. And while they would be correct in that they are all computers, they are not the limit of computers. Computers are simply devices with which we compute.

A computer can be a modern electronic device, but it can also be our fingers and toes. It is the ruler that we use to measure a line or the protractor to determine an angle. These devices all share a common trait. They take data and process it into information – they compute.

The computer itself can be separated into hardware and software. The hardware is the set of physical components: input devices, processors, output devices, and memory. The components each perform a different task, such as entering data, processing it, or delivering the results to the user. Making all of the components work together requires software, a set of instructions on how to process the data.

So while computers compute, the challenge can be in how the process is done. Whether it is the task of putting a straight edge to a line or calculating complex beam loadings for a bridge, there will be a specific process we must follow. This is an algorithm. And when we implement the algorithm on a computer, it becomes a program. As we move forward we will need to understand how to develop an algorithm and implement it as a computer program.

- What is computing?

- What is the role of the computer in computing?

- What are examples of the computers used by the ancients?

- Write about the first mechanical computer.

- What is the Antikythera Mechanism?

- What was Babbage’s Analytical Engine?

- What is an interruptor in computer terms?

- How did world conflict bring about advances in computing?

- What was the Enigma machine used for?

- What was the ENIAC?

- What is an algorithm?

- What is software? What is hardware? Give examples.

- Describe the difference between hardware and software. What are examples of each?

- What is the purpose of the Central Processing Unit?

- What is the difference between Main Memory (RAM) and Secondary Memory?

- What is meant by a data stream?


The Inca quipu was a system of strings and knots that was used to store numerical information.
