Module 14: Multiple and Logistic Regression

Introduction: Multiple and Logistic Regression

Barbara Illowsky & OpenStax et al.

The principles of simple linear regression lay the foundation for more sophisticated regression methods used in a wide range of challenging settings. In this section, we explore multiple regression, which introduces the possibility of more than one predictor, and logistic regression, a technique for predicting categorical outcomes with two possible categories.

Multiple regression extends simple two-variable regression to the case that still has one response but many predictors (denoted [latex]x_1, x_2, x_3, \dots[/latex]). The method is motivated by scenarios where many variables may be simultaneously connected to an output.

We will consider eBay auctions of a video game called Mario Kart for the Nintendo Wii. The outcome variable of interest is the total price of an auction, which is the highest bid plus the shipping cost. We will try to determine how total price is related to each characteristic in an auction while simultaneously controlling for other variables. For instance, all other characteristics held constant, are longer auctions associated with higher or lower prices? And, on average, how much more do buyers tend to pay for additional Wii wheels (plastic steering wheels that attach to the Wii controller) in auctions? Multiple regression will help us answer these and other questions.

The data set mario_kart includes results from 141 auctions.[1] Four observations from this data set are shown in Table 1, and descriptions for each variable are shown in Table 2. Notice that the condition and stock photo variables are indicator variables. For instance, the cond_new variable takes value 1 if the game up for auction is new and 0 if it is used. Using indicator variables in place of category names allows for these variables to be directly used in regression. Multiple regression also allows for categorical variables with many levels, though we do not have any such variables in this analysis, and we save these details for a second or third course.

Table 1. Four observations from the mario_kart data set.

| | price | cond_new | stock_photo | duration | wheels |
|---|---|---|---|---|---|
| 1 | 51.55 | 1 | 1 | 3 | 1 |
| 2 | 37.04 | 0 | 1 | 7 | 1 |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| 140 | 38.76 | 0 | 0 | 7 | 0 |
| 141 | 54.51 | 1 | 1 | 1 | 2 |

Table 2. Variables and their descriptions for the mario_kart data set.

| Variable | Description |
|---|---|
| price | final auction price plus shipping costs, in US dollars |
| cond_new | a coded two-level categorical variable, which takes value 1 when the game is new and 0 if the game is used |
| stock_photo | a coded two-level categorical variable, which takes value 1 if the primary photo used in the auction was a stock photo and 0 if the photo was unique to that auction |
| duration | the length of the auction, in days, taking values from 1 to 10 |
| wheels | the number of Wii wheels included with the auction (a Wii wheel is a plastic racing wheel that holds the Wii controller and is an optional but helpful accessory for playing Mario Kart) |

A single-variable model for the Mario Kart data

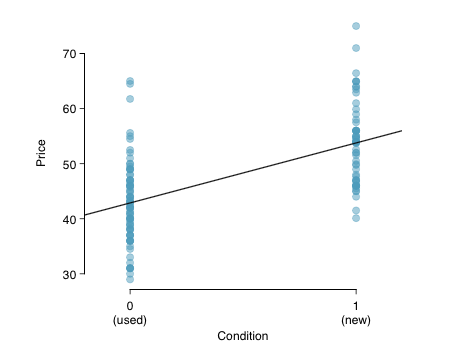

Let’s fit a linear regression model with the game’s condition as a predictor of auction price. The model may be written as

[latex]\widehat{\text{price}}=42.87 + 10.90\times\text{cond\_new}[/latex]

Table 3 summarizes this model, and the accompanying scatterplot shows price versus game condition.

Table 3. Summary of a linear model for predicting auction price based on game condition.

| | Estimate | Std. Error | t value | Pr(>\|t\|) |
|---|---|---|---|---|
| (Intercept) | 42.8711 | 0.8140 | 52.67 | 0.0000 |
| cond_new | 10.8996 | 1.2583 | 8.66 | 0.0000 |

df = 139

Try it

Examine the scatterplot for the mario_kart data set. Does the linear model seem reasonable?

Solution:

Yes. Constant variability, nearly normal residuals, and linearity all appear reasonable.

Example

Interpret the coefficient for the game’s condition in the model. Is this coefficient significantly different from 0?

Solution:

Note that cond_new is a two-level categorical variable that takes value 1 when the game is new and value 0 when the game is used. So 10.90 means that the model predicts an extra $10.90 for games that are new versus those that are used. Examining the regression output in Table 3, we can see that the p-value for cond_new is very close to zero, indicating strong evidence that the coefficient is different from zero when using this simple one-variable model.
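The interpretation above can be checked with a few lines of arithmetic. The following is a minimal Python sketch that uses only the estimates and standard errors printed in Table 3; the function name `predicted_price` is a hypothetical helper introduced for illustration.

```python
# Point estimates and standard errors copied from Table 3.
intercept, intercept_se = 42.8711, 0.8140
slope, slope_se = 10.8996, 1.2583


def predicted_price(cond_new):
    """Predicted total price for a used (cond_new=0) or new (cond_new=1) game."""
    return intercept + slope * cond_new


used_price = predicted_price(0)
new_price = predicted_price(1)

# The t value reported in the table is the estimate divided by its standard error.
t_slope = slope / slope_se

print(f"predicted price, used game: {used_price:.2f}")
print(f"predicted price, new game:  {new_price:.2f}")
print(f"difference (new - used):    {new_price - used_price:.2f}")
print(f"t value for cond_new:       {t_slope:.2f}")
```

The difference between the two predictions is exactly the slope, which is why the coefficient of an indicator variable reads as "new versus used."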

Including and assessing many variables in a model

Sometimes there are underlying structures or relationships between predictor variables. For instance, new games sold on eBay tend to come with more Wii wheels, which may have led to higher prices for those auctions. We would like to fit a model that includes all potentially important variables simultaneously. This would help us evaluate the relationship between a predictor variable and the outcome while controlling for the potential influence of other variables. This is the strategy used in multiple regression. While we remain cautious about making any causal interpretations using multiple regression, such models are a common first step in providing evidence of a causal connection.

We want to construct a model that accounts not only for the game condition, as in the single-variable model above, but simultaneously for three other variables: stock photo, duration, and wheels.

[latex]\begin{array}{rl}\widehat{\text{price}} &= \beta_0 + \beta_1\times\text{cond\_new} + \beta_2\times\text{stock\_photo} + \beta_3\times\text{duration} + \beta_4\times\text{wheels}\\ \hat{y} &= \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_4\end{array}[/latex]

In this equation, [latex]y[/latex] represents the total price, [latex]x_1[/latex] indicates whether the game is new, [latex]x_2[/latex] indicates whether a stock photo was used, [latex]x_3[/latex] is the duration of the auction, and [latex]x_4[/latex] is the number of Wii wheels included with the game. Just as with the single predictor case, a multiple regression model may be missing important components or it might not precisely represent the relationship between the outcome and the available explanatory variables. While no model is perfect, we wish to explore the possibility that this one may fit the data reasonably well.

We estimate the parameters [latex]\beta_0, \beta_1, \dots, \beta_4[/latex] in the same way as we did in the case of a single predictor. We select [latex]b_0, b_1, \dots, b_4[/latex] that minimize the sum of the squared residuals:

[latex]\displaystyle SSE = e_1^2 + e_2^2 + \dots + e_{141}^2 = \sum_{i=1}^{141} e_i^2 = \sum_{i=1}^{141}\left(y_i - \hat{y}_i\right)^2[/latex]

Here there are 141 residuals, one for each observation. We typically use a computer to minimize the SSE and compute point estimates, as shown in the sample output in Table 4. Using this output, we identify the point estimate [latex]b_i[/latex] of each [latex]\beta_i[/latex], just as we did in the one-predictor case.

Table 4. Output for the regression model where price is the outcome and cond_new, stock_photo, duration, and wheels are the predictors.

| | Estimate | Std. Error | t value | Pr(>\|t\|) |
|---|---|---|---|---|
| (Intercept) | 36.2110 | 1.5140 | 23.92 | 0.0000 |
| cond_new | 5.1306 | 1.0511 | 4.88 | 0.0000 |
| stock_photo | 1.0803 | 1.0568 | 1.02 | 0.3085 |
| duration | −0.0268 | 0.1904 | −0.14 | 0.8882 |
| wheels | 7.2852 | 0.5547 | 13.13 | 0.0000 |

df = 136
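To make "minimize the sum of squared residuals" concrete, here is a minimal Python sketch of what the computer does. It is an illustration under stated assumptions, not the software used to produce Table 4: the six auctions are made-up toy values (not rows of the mario_kart data), only two predictors are used, and `fit_least_squares` and `sse` are hypothetical helper names. The sketch solves the normal equations by Gaussian elimination and then checks that nudging a fitted coefficient makes the SSE larger.

```python
def fit_least_squares(rows, y):
    """Return coefficients [b0, b1, ...] that minimize the sum of squared residuals.

    rows: list of predictor lists (no intercept column); y: list of outcomes.
    Solves the normal equations (X^T X) b = X^T y with Gauss-Jordan elimination.
    """
    X = [[1.0] + [float(v) for v in r] for r in rows]  # prepend intercept column
    p = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [[sum(x[i] * x[j] for x in X) for j in range(p)]
         + [sum(x[i] * yi for x, yi in zip(X, y))]
         for i in range(p)]
    for col in range(p):
        # Partial pivoting: bring the largest remaining entry into the pivot row.
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]


def sse(coefs, rows, y):
    """Sum of squared residuals for a given set of coefficients."""
    total = 0.0
    for r, yi in zip(rows, y):
        y_hat = coefs[0] + sum(b * x for b, x in zip(coefs[1:], r))
        total += (yi - y_hat) ** 2
    return total


# Made-up toy auctions: each row is [cond_new, wheels]; prices are invented.
toy_rows = [[1, 1], [0, 1], [0, 0], [1, 2], [0, 2], [1, 0]]
toy_prices = [52.0, 46.5, 40.5, 59.5, 54.0, 44.0]

b = fit_least_squares(toy_rows, toy_prices)
best = sse(b, toy_rows, toy_prices)
nudged = sse([b[0], b[1], b[2] + 0.5], toy_rows, toy_prices)

print("fitted coefficients:", [round(v, 3) for v in b])
print("SSE at the fitted coefficients:", round(best, 4))
print("SSE after nudging the wheels coefficient:", round(nudged, 4))
```

Any change to the fitted coefficients increases the SSE, which is what it means for the point estimates to be the least squares solution. Statistical software uses more numerically robust methods, but the quantity being minimized is the same.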

Multiple regression model

A multiple regression model is a linear model with many predictors. In general, we write the model as

[latex]\hat{y} = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k[/latex]

when there are k predictors. We often estimate the [latex]\beta_i[/latex] parameters using a computer.

Try It

Write out the model

[latex]\begin{array}{rl}\widehat{\text{price}} &= \beta_0 + \beta_1\times\text{cond\_new} + \beta_2\times\text{stock\_photo} + \beta_3\times\text{duration} + \beta_4\times\text{wheels}\\ \hat{y} &= \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \beta_4 x_4\end{array}[/latex]

using the point estimates from Table 4.

How many predictors are there in this model?

Solution:

[latex]\hat{y}=36.21+5.13x_1+1.08x_2-0.03x_3+7.29x_4[/latex], and there are k = 4 predictor variables.

Try It

What does [latex]\beta_4[/latex], the coefficient of variable [latex]x_4[/latex] (Wii wheels), represent? What is the point estimate of [latex]\beta_4[/latex]?

Solution:

It is the average difference in auction price for each additional Wii wheel included when holding the other variables constant. The point estimate is [latex]b_4 = 7.29[/latex].

Try It

Compute the residual of the first observation in Table 1 using the fitted equation with the point estimates from Table 4.

Solution:

[latex]e_i = y_i - \hat{y}_i = 51.55 - 49.62 = 1.93[/latex]
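The residual above can be reproduced step by step. This short Python sketch plugs the first row of Table 1 into the fitted equation, using the rounded point estimates from Table 4; the variable names are chosen here for illustration.

```python
# Rounded point estimates from Table 4, in the order:
# intercept, cond_new, stock_photo, duration, wheels.
coefs = [36.21, 5.13, 1.08, -0.03, 7.29]

# First observation from Table 1.
observed_price = 51.55
predictors = [1, 1, 3, 1]  # cond_new, stock_photo, duration, wheels

# Predicted price: intercept plus each coefficient times its predictor value.
y_hat = coefs[0] + sum(b * x for b, x in zip(coefs[1:], predictors))

# Residual: observed outcome minus predicted outcome.
residual = observed_price - y_hat

print(f"predicted price: {y_hat:.2f}")   # 49.62
print(f"residual:        {residual:.2f}")  # 1.93
```

A positive residual means this auction sold for more than the model predicted.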

Example

A coefficient for cond_new of [latex]b_1 = 10.90[/latex], with a standard error of [latex]SE_{b_1} = 1.26[/latex], was calculated using simple linear regression with one variable. Why might there be a difference between that estimate and the one in the multiple regression setting?

Solution:

If we examined the data carefully, we would see that some predictors are correlated. For instance, when we estimated the connection between the outcome price and the predictor cond_new using simple linear regression, we were unable to control for other variables like the number of Wii wheels included in the auction. That model was biased by the confounding variable wheels. When we use both variables, this particular underlying and unintentional bias is reduced or eliminated (though bias from other confounding variables may still remain).

The previous example describes a common issue in multiple regression: correlation among predictor variables. We say two predictor variables are collinear (pronounced co-linear) when they are correlated, and this collinearity complicates model estimation. While it is impossible to prevent collinearity from arising in observational data, experiments are usually designed to prevent predictors from being collinear.
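A small illustration may help show how a confounder inflates a one-variable estimate. The Python sketch below uses made-up, noise-free toy auctions (not the mario_kart data) in which price is generated as 40 + 5 × cond_new + 7 × wheels and new games come with more wheels. It relies on the fact that, with a single 0/1 predictor, the least squares slope equals the difference between the two group means. All names and numbers here are assumptions chosen for illustration.

```python
from statistics import mean

# Hypothetical auctions as (cond_new, wheels); new games tend to include more wheels.
auctions = [(0, 0), (0, 0), (0, 1), (0, 1), (1, 1), (1, 2), (1, 2), (1, 3)]


def toy_price(cond_new, wheels):
    """Made-up pricing rule: being new adds 5, each wheel adds 7."""
    return 40 + 5 * cond_new + 7 * wheels


prices = [toy_price(c, w) for c, w in auctions]

new_prices = [p for (c, _), p in zip(auctions, prices) if c == 1]
used_prices = [p for (c, _), p in zip(auctions, prices) if c == 0]
new_wheels = [w for c, w in auctions if c == 1]
used_wheels = [w for c, w in auctions if c == 0]

# Slope from regressing price on cond_new alone = difference in group means.
naive_slope = mean(new_prices) - mean(used_prices)

# The extra amount beyond 5 comes from the wheels that new games tend to include.
wheel_gap = mean(new_wheels) - mean(used_wheels)

print("one-variable slope for cond_new:", naive_slope)
print("true cond_new effect in the toy rule: 5")
print("part attributable to wheels:", 7 * wheel_gap)
```

In this toy setting the one-variable slope absorbs the price of the extra wheels. Because the toy prices follow the rule exactly, a model that includes both cond_new and wheels would recover the coefficient 5 for cond_new.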

Try It

The estimated value of the intercept is 36.21, and one might be tempted to interpret this coefficient as the model’s predicted price when each of the variables takes value zero: the game is used, the primary image is not a stock photo, the auction duration is zero days, and there are no wheels included. Is there any value gained by making this interpretation?

Solution:

Three of the variables (cond_new, stock_photo, and wheels) do take value 0, but the auction duration is always one or more days. If the auction is not up for any days, then no one can bid on it! That means the total auction price would always be zero for such an auction; the interpretation of the intercept in this setting is not insightful.

Adjusted R² as a better estimate of explained variance

We first used R² to determine the amount of variability in the response that was explained by the model:

[latex]\displaystyle R^2 = 1-\frac{\text{variability in residuals}}{\text{variability in the outcome}} = 1-\frac{\text{Var}(e_i)}{\text{Var}(y_i)}[/latex]

where [latex]e_i[/latex] represents the residuals of the model and [latex]y_i[/latex] the outcomes. This equation remains valid in the multiple regression framework, but a small enhancement can often be even more informative.

This strategy for estimating R² is acceptable when there is just a single variable. However, it becomes less helpful when there are many variables. The regular R² is a biased estimate of the amount of variability explained by the model. To get a better estimate, we use the adjusted R².

Adjusted R² as a tool for model assessment

The adjusted R² is computed as

[latex]\displaystyle R^2_{adj} = 1-\frac{\text{Var}(e_i)/(n-k-1)}{\text{Var}(y_i)/(n-1)} = 1-\frac{\text{Var}(e_i)}{\text{Var}(y_i)}\times\frac{n-1}{n-k-1}[/latex]

where n is the number of cases used to fit the model and k is the number of predictor variables in the model.

Because k is never negative, the adjusted R² is never larger than the unadjusted R², and with at least one predictor it is smaller, often just a little smaller. The reasoning behind the adjusted R² lies in the degrees of freedom associated with each variance.[2]

Try It

There were n = 141 auctions in the mario_kart data set and k = 4 predictor variables in the model. Use n, k, and the variances [latex]\text{Var}(e_i) = 23.34[/latex] and [latex]\text{Var}(y_i) = 83.06[/latex] to calculate [latex]R^2_{adj}[/latex] for the Mario Kart model.

Solution:

[latex]\displaystyle R^2_{adj} = 1-\frac{23.34}{83.06}\times\frac{141-1}{141-4-1} = 0.711[/latex]
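The same calculation can be written as two small functions, which makes it easy to compare the unadjusted and adjusted values side by side. This is a minimal Python sketch using the variances 23.34 and 83.06 from the solution above; the function names are hypothetical.

```python
def r_squared(var_resid, var_outcome):
    """Unadjusted R^2: one minus the ratio of residual to outcome variance."""
    return 1 - var_resid / var_outcome


def adjusted_r_squared(var_resid, var_outcome, n, k):
    """Adjusted R^2 for n cases and k predictors."""
    return 1 - (var_resid / var_outcome) * (n - 1) / (n - k - 1)


var_resid, var_outcome = 23.34, 83.06  # Var(e_i) and Var(y_i) from the solution
n, k = 141, 4

r2 = r_squared(var_resid, var_outcome)
r2_adj = adjusted_r_squared(var_resid, var_outcome, n, k)

print(f"R^2:          {r2:.3f}")      # 0.719
print(f"adjusted R^2: {r2_adj:.3f}")  # 0.711
```

The adjustment is small here because n = 141 is large relative to k = 4, so the factor (n − 1)/(n − k − 1) = 140/136 is close to 1.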

Try It

Suppose you added another predictor to the model, but the variance of the errors [latex]\text{Var}(e_i)[/latex] didn’t go down. What would happen to the R²? What would happen to the adjusted R²?

Solution:

The unadjusted R² would stay the same and the adjusted R² would go down.

Adjusted R² could have been used earlier. However, when there is only k = 1 predictor, adjusted R² is very close to regular R², so this nuance isn’t typically important when considering only one predictor.
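As a worked illustration, suppose (hypothetically) that a fifth predictor were added to the Mario Kart model and the variances stayed at [latex]\text{Var}(e_i) = 23.34[/latex] and [latex]\text{Var}(y_i) = 83.06[/latex]. Only the degrees-of-freedom factor changes:

[latex]\displaystyle k=4:\; R^2_{adj} = 1-\frac{23.34}{83.06}\times\frac{140}{136}\approx 0.711 \qquad k=5:\; R^2_{adj} = 1-\frac{23.34}{83.06}\times\frac{140}{135}\approx 0.709[/latex]

The unadjusted value, [latex]1-\frac{23.34}{83.06}\approx 0.719[/latex], is the same in both cases, while the adjusted value drops slightly because [latex]\frac{n-1}{n-k-1}[/latex] grows as k increases.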

[1] Diez DM, Barr CD, Cetinkaya-Rundel M. 2015. openintro: OpenIntro data sets and supplement functions. github.com/OpenIntroOrg/openintro-r-package.

[2] In multiple regression, the number of degrees of freedom associated with the variance estimate of the residuals is n − k − 1, not n − 1. For instance, if we were to make predictions for new data using our current model, we would find that the unadjusted R² is an overly optimistic estimate of the reduction in variance in the response, and using the degrees of freedom in the adjusted R² formula helps correct this bias.